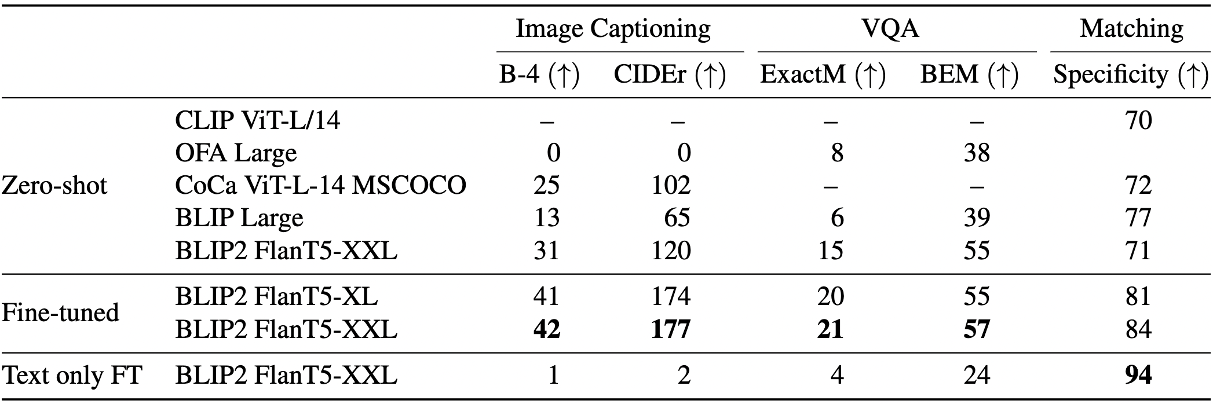

Breaking Common Sense: WHOOPS!

A Vision-and-Language Benchmark of Synthetic and Compositional Images

arXiv Medium

Dataset🤗

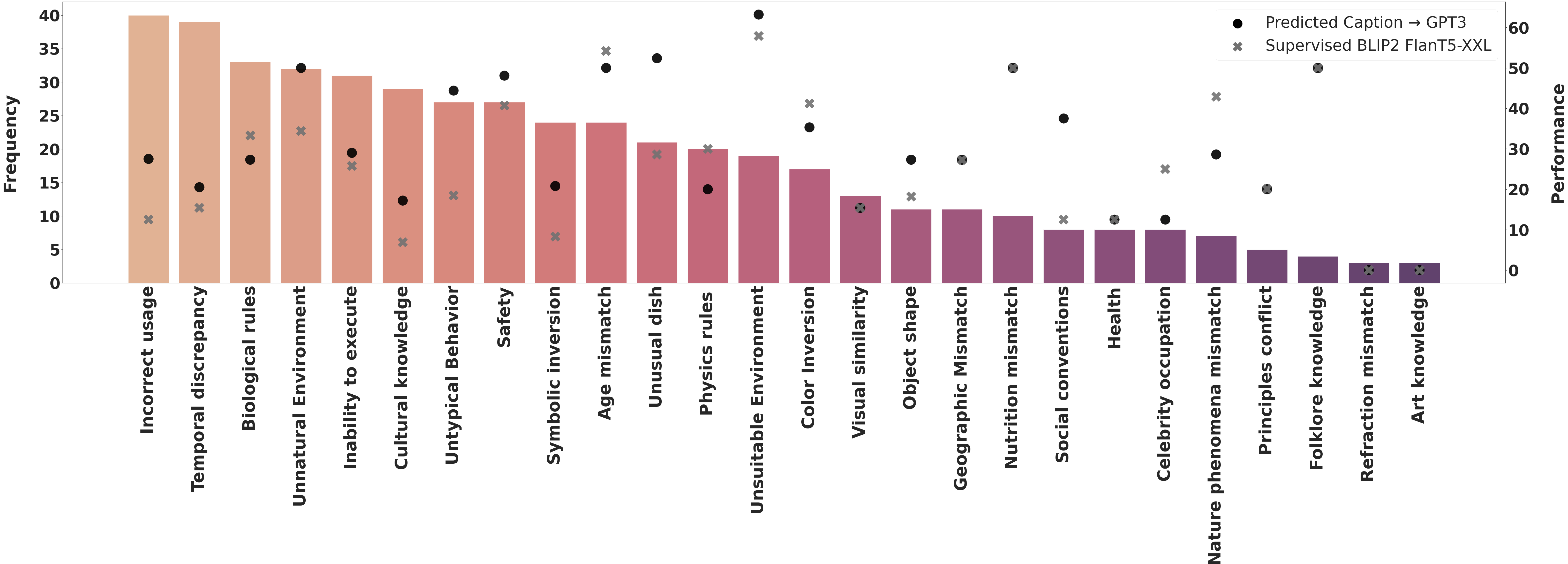

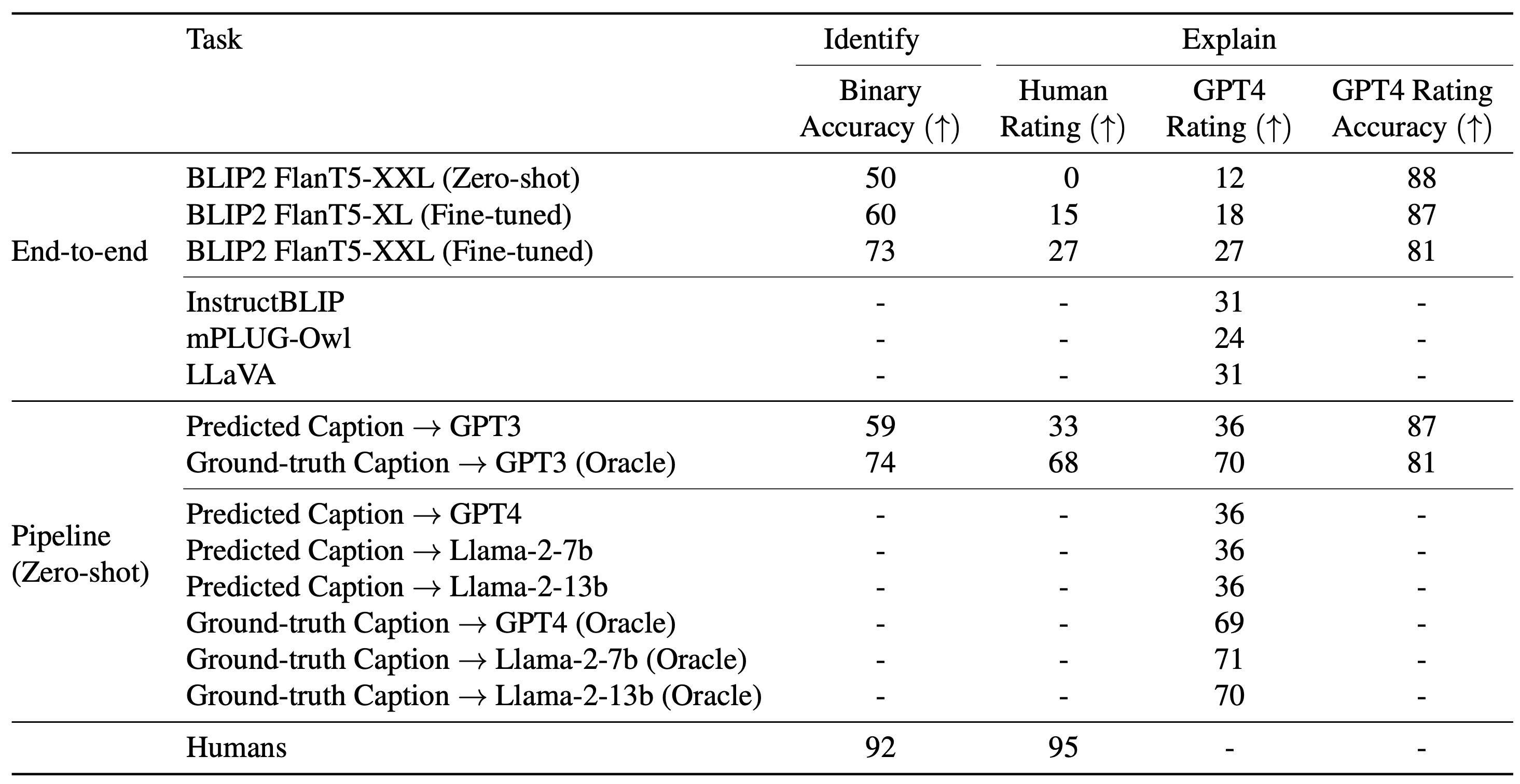

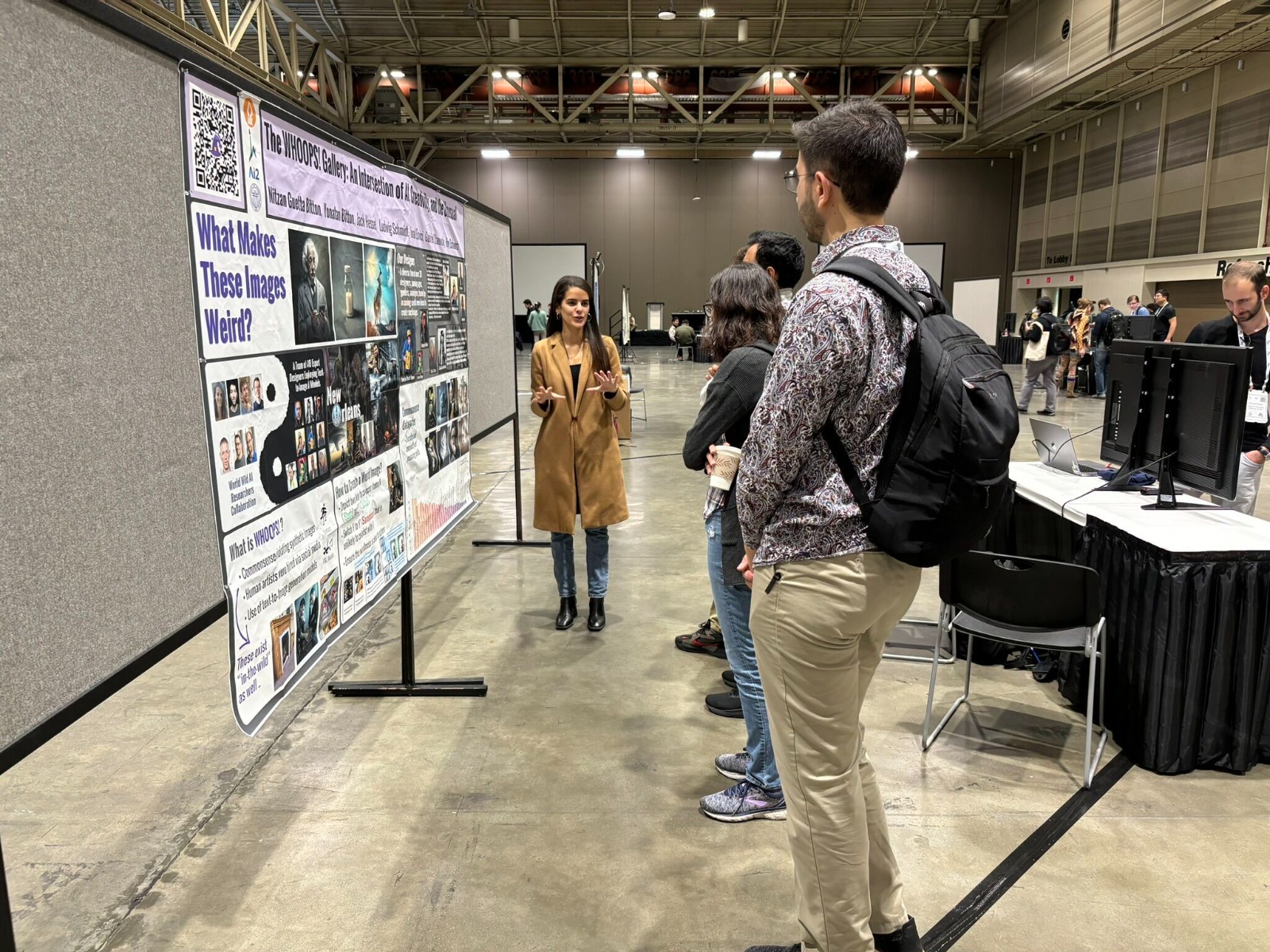

Explorer Tasks Evaluation Explanation of Violation Evaluation ICCV poster NeurIPS poster
